一 案例背景

从生产控制台上看到一条下述SQL:

格式化之后SQL语句为:

select '01' status, count(1) from t_ai_prd_item where status = '01' and deleted = false union all select '02' status, count(1) from t_ai_prd_item where status = '02' and deleted = false

一个union all的联合查询,每次执行耗时1秒。有没有优化余地呢?

二 优化分析

下述通过copy原表t_ai_prd_item为t1,来进行分析优化。

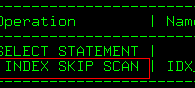

1 看SQL执行计划及数据量分布:

ai=> explain analyze select '01' status, count(1) from t1 where status = '01' and deleted = false

ai-> union all

ai-> select '02' status, count(1) from t1 where status = '02' and deleted = false;

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------

Append (cost=160483.13..320974.20 rows=2 width=40) (actual time=5211.374..6250.940 rows=2 loops=1)

-> Aggregate (cost=160483.13..160483.14 rows=1 width=0) (actual time=5211.374..5211.374 rows=1 loops=1)

-> Seq Scan on t1 (cost=0.00..160483.12 rows=1 width=0) (actual time=5211.369..5211.369 rows=0 loops=1)

Filter: ((NOT deleted) AND ((status)::text = '01'::text))

Rows Removed by Filter: 1395189

-> Aggregate (cost=160491.03..160491.04 rows=1 width=0) (actual time=1039.563..1039.563 rows=1 loops=1)

-> Seq Scan on t1 t1_1 (cost=0.00..160483.12 rows=3163 width=0) (actual time=901.577..1039.307 rows=2835 loops=1)

Filter: ((NOT deleted) AND ((status)::text = '02'::text))

Rows Removed by Filter: 1392354

Planning time: 0.417 ms

Execution time: 6251.024 ms

(11 rows)

ai=> select count(*) from t_ai_prd_item;

count

---------

1395189

(1 row)

ai=> select count(*),status from t_ai_prd_item group by status;

count | status

---------+--------

364 | 04

25 | 05

2835 | 02

1391965 | 06

(4 rows)

ai=>

分析发现,表1中有1395189条记录,status=’01’的记录为0,status=’02’的记录为2835条。

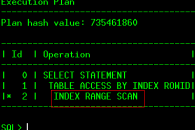

2 那么在status字段上建立一个btree index,效果会怎么样呢?

ai=> create index idx_status on t1(status);

CREATE INDEX

ai=> explain analyze select '01' status, count(1) from t1 where status = '01' and deleted = false

ai-> union all

ai-> select '02' status, count(1) from t1 where status = '02' and deleted = false;

QUERY PLAN

----------------------------------------------------------------------------------------------------------------------------------------

Append (cost=8.45..730.24 rows=2 width=40) (actual time=0.037..3.999 rows=2 loops=1)

-> Aggregate (cost=8.45..8.46 rows=1 width=0) (actual time=0.036..0.036 rows=1 loops=1)

-> Index Scan using idx_status on t1 (cost=0.43..8.45 rows=1 width=0) (actual time=0.033..0.033 rows=0 loops=1)

Index Cond: ((status)::text = '01'::text)

Filter: (NOT deleted)

-> Aggregate (cost=721.76..721.77 rows=1 width=0) (actual time=3.962..3.962 rows=1 loops=1)

-> Index Scan using idx_status on t1 t1_1 (cost=0.43..714.10 rows=3063 width=0) (actual time=0.029..3.673 rows=2835 loops=1)

Index Cond: ((status)::text = '02'::text)

Filter: (NOT deleted)

Planning time: 0.291 ms

Execution time: 4.067 ms

(11 rows)

ai=>

效果不错,但是结合到实际业务场景,此处只是针对status=’01’和status=’02’的情况来做统计。那么有没有更好的方法来解决这个场景呢?

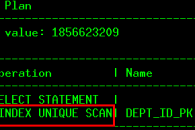

3 创建1个partial index来测试

ai=> create index idx_partial on t1(status) where (status='01' or status='02');

CREATE INDEX

ai=> explain analyze select '01' status, count(1) from t1 where status = '01' and deleted = false

ai-> union all

ai-> select '02' status, count(1) from t1 where status = '02' and deleted = false;

QUERY PLAN

----------------------------------------------------------------------------------------------------------------------------------------

Append (cost=8.30..732.19 rows=2 width=40) (actual time=0.019..3.916 rows=2 loops=1)

-> Aggregate (cost=8.30..8.31 rows=1 width=0) (actual time=0.018..0.018 rows=1 loops=1)

-> Index Scan using idx_partial on t1 (cost=0.28..8.30 rows=1 width=0) (actual time=0.015..0.015 rows=0 loops=1)

Index Cond: ((status)::text = '01'::text)

Filter: (NOT deleted)

-> Aggregate (cost=723.85..723.86 rows=1 width=0) (actual time=3.897..3.897 rows=1 loops=1)

-> Index Scan using idx_partial on t1 t1_1 (cost=0.28..716.20 rows=3063 width=0) (actual time=0.030..3.599 rows=2835 loops=1)

Index Cond: ((status)::text = '02'::text)

Filter: (NOT deleted)

Planning time: 0.234 ms

Execution time: 3.992 ms

(11 rows)

ai=>

对比,发现SQL的执行效率几乎没有差别。但是:

ai=> \di+ idx_status

List of relations

Schema | Name | Type | Owner | Table | Size | Description

--------+------------+-------+-------+-------+-------+-------------

ai | idx_status | index | ai | t1 | 30 MB |

(1 row)

ai=> \di+ idx_partial

List of relations

Schema | Name | Type | Owner | Table | Size | Description

--------+-------------+-------+-------+-------+-------+-------------

ai | idx_partial | index | ai | t1 | 80 kB |

(1 row)

ai=>

4 小结:

在确保SQL执行效率的同时,这个partial index所占的存储空间是b-tree index的1/384,大大降低了存储空间的开销。

三 关于partial index

1 什么是partial index?

分区索引是基于表中部分数据建立的,被索引的部分数据是原数据的一个子集。这个子集数据是基于某些条件可以明确计算出来的。

2 partial index适用场景?

对于表中数据分布“不均匀”的场景,比如某些数据占据绝大多数,而其它一些数据只占少量,且要满足查询条件针是对那些小数据量的查询情形。此例正好满足这种情况,总数据量为140万左右,而状态为01和02的数据只占极少的比例,且查询是针对状态为01和02的查询。

通过partial index可以排除大量普通数据或业务上“不感兴趣”的数据。如:一个在线订单系统,可以针对那些不在经常访问的客户端IP范围之外的IP进行创建分区索引,或者针对已下单还未支付的订单进行分区索引的创建。这样,当查询那些不在常用IP范围内的订单,或者那些已下单未支付的订单信息时,可以保证查询效率。

3 partial index的优势?

由于其是针对部分数据进行索引,显然同传统b-tree索引相比,其所占磁盘空间将大大缩小。当重建维护这些分区索引时,速度也非常快。

4 参考

partial index官方文档地址:

https://www.postgresql.org/docs/9.3/static/indexes-partial.html