8 Install the Grid Infrastructure software

Unzip the grid installation media:

[root@node1 ~]# unzip p13390677_112040_Linux-x86-64_3of7.zip

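Extracting the 3rd archive produces a grid directory. After extraction, move that directory into the oracle user's home directory, /home/oracle: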

[root@node1 ~]# ll

total 3667892

drwxr-xr-x 4 root root 4096 Dec 24 17:34 10gbackup

-rw-r--r-- 1 root root 1016 Dec 19 13:50 7oracle_.bash_profile.sh

-rw------- 1 root root 1396 Dec 19 10:50 anaconda-ks.cfg

drwxr-xr-x 2 root root 4096 Dec 19 16:29 Desktop

-rw-r--r-- 1 root root 52733 Dec 19 10:50 install.log

-rw-r--r-- 1 root root 4077 Dec 19 10:50 install.log.syslog

drwxr-xr-x 7 root root 4096 Dec 24 15:51 media

-rw-r--r-- 1 root root 1395582860 Dec 24 20:04 p13390677_112040_Linux-x86-64_1of7.zip

-rw-r--r-- 1 root root 1151304589 Dec 24 20:05 p13390677_112040_Linux-x86-64_2of7.zip

-rw-r--r-- 1 root root 1205251894 Dec 24 20:06 p13390677_112040_Linux-x86-64_3of7.zip

[root@node1 ~]# unzip p13390677_112040_Linux-x86-64_3of7.zip

....

....

inflating: grid/rpm/cvuqdisk-1.0.9-1.rpm

inflating: grid/runcluvfy.sh

inflating: grid/welcome.html

[root@node1 ~]# ll

total 3667900

drwxr-xr-x 4 root root 4096 Dec 24 17:34 10gbackup

-rw-r--r-- 1 root root 1016 Dec 19 13:50 7oracle_.bash_profile.sh

-rw------- 1 root root 1396 Dec 19 10:50 anaconda-ks.cfg

drwxr-xr-x 2 root root 4096 Dec 19 16:29 Desktop

drwxr-xr-x 7 root root 4096 Aug 27 12:33 grid

-rw-r--r-- 1 root root 52733 Dec 19 10:50 install.log

-rw-r--r-- 1 root root 4077 Dec 19 10:50 install.log.syslog

drwxr-xr-x 7 root root 4096 Dec 24 15:51 media

-rw-r--r-- 1 root root 1395582860 Dec 24 20:04 p13390677_112040_Linux-x86-64_1of7.zip

-rw-r--r-- 1 root root 1151304589 Dec 24 20:05 p13390677_112040_Linux-x86-64_2of7.zip

-rw-r--r-- 1 root root 1205251894 Dec 24 20:06 p13390677_112040_Linux-x86-64_3of7.zip

[root@node1 ~]#

As root, move the extracted grid directory into the oracle user's home directory:

[root@node1 ~]# mv grid/ /home/oracle/
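Before switching to the oracle user, you may want to confirm that the moved directory is complete. The sketch below is an illustrative check, not part of Oracle's tooling. It looks for two files that appear in the unzip log above (runcluvfy.sh and rpm/cvuqdisk-*.rpm) and defaults to the /home/oracle/grid path used here. The function name verify_grid_stage is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: check that a staged grid directory contains files
# seen in the unzip log above. Returns 0 if both are present, 1 otherwise.
verify_grid_stage() {
  local dir="${1:-/home/oracle/grid}"
  local status=0
  # Cluster verification script at the top of the grid media
  if [ ! -f "$dir/runcluvfy.sh" ]; then
    echo "missing: $dir/runcluvfy.sh" >&2
    status=1
  fi
  # cvuqdisk RPM shipped under rpm/ (1.0.9-1 in this install)
  if ! ls "$dir"/rpm/cvuqdisk-*.rpm >/dev/null 2>&1; then
    echo "missing: $dir/rpm/cvuqdisk-*.rpm" >&2
    status=1
  fi
  return "$status"
}
```

For example: `verify_grid_stage /home/oracle/grid && echo "grid media staged"`.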

Log in to the graphical desktop as the oracle user:

Launch the graphical installer (./runInstaller in /home/oracle/grid):
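The installer needs a working X display in the oracle session. Here is a minimal sketch, assuming the media sits in /home/oracle/grid as above. launch_grid_installer is a hypothetical wrapper. It refuses to start when DISPLAY is empty or when run as root, and otherwise runs runInstaller from the grid directory in a subshell, so the caller's working directory is left unchanged.

```shell
#!/bin/bash
# Hypothetical wrapper: start the Grid installer only when an X display is
# available and the current user is not root.
launch_grid_installer() {
  local grid_dir="${1:-/home/oracle/grid}"
  if [ -z "${DISPLAY:-}" ]; then
    echo "DISPLAY is not set; log in to the graphical desktop as oracle first" >&2
    return 1
  fi
  if [ "$(id -u)" -eq 0 ]; then
    echo "run the installer as oracle, not root" >&2
    return 1
  fi
  # Subshell so the caller's working directory is not changed
  ( cd "$grid_dir" && ./runInstaller )
}
```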

Next:

Next:

Next:

Next: for SCAN Name, enter scan-cluster.oracleonlinux.cn, the name configured earlier in /etc/hosts.

Next:

Add node 2, with public hostname node2.oracleonlinux.cn and virtual hostname node2-vip.oracleonlinux.cn:
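The installer validates the SCAN, public, and VIP names, so a quick resolution check on each node can catch a typo in /etc/hosts early. This sketch assumes getent is available (on Linux it comes with glibc). It only checks that each name resolves, not that it maps to the intended address. check_names_resolve is a hypothetical helper. When the SCAN name is defined only in /etc/hosts, it resolves to a single address. That matches the single ora.scan1.vip resource in the crs_stat output at the end of this section.

```shell
#!/bin/bash
# Hypothetical helper: report whether each given name resolves on this node.
# getent follows /etc/nsswitch.conf, so /etc/hosts entries are included.
check_names_resolve() {
  local status=0 name addr
  for name in "$@"; do
    # Take the first address getent returns, if any
    addr=$(getent hosts "$name" | awk '{ print $1; exit }')
    if [ -n "$addr" ]; then
      echo "ok:      $name -> $addr"
    else
      echo "missing: $name" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run it on both nodes, for example: `check_names_resolve scan-cluster.oracleonlinux.cn node2.oracleonlinux.cn node2-vip.oracleonlinux.cn`.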

Next:

Next:

Next:

Next:

Next:

Next:

Next:

Next:

Next: specify the ORACLE_BASE and ORACLE_HOME created in step 3:
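The 11.2 Grid installer expects the Grid home to lie outside ORACLE_BASE. Here the Grid home is /u01/app/11.2.0/grid, as root.sh reports later. The check below is a string-only sketch. The value /u01/app/oracle for ORACLE_BASE is an assumed example, because the step 3 value is not repeated here. grid_home_outside_base is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: succeed (0) when the Grid home is neither ORACLE_BASE
# itself nor below it. Plain string comparison: symlinks and ".." are not
# resolved, and trailing slashes are ignored.
grid_home_outside_base() {
  local base="${1%/}" home="${2%/}"
  case "$home/" in
    "$base"/*) return 1 ;;
    *) return 0 ;;
  esac
}
```

For example: `grid_home_outside_base /u01/app/oracle /u01/app/11.2.0/grid && echo "Grid home is outside ORACLE_BASE"`. The trailing slash added to the home path keeps a sibling such as /u01/app/oracle2 from being mistaken for a subdirectory of /u01/app/oracle.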

Next:

Click Fix & Check Again:

As prompted, run the generated fixup script as root on each node:

Node 1:

[root@node1 ~]# /tmp/CVU_11.2.0.4.0_oracle/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_oracle/orarun.log

Setting Kernel Parameters...

fs.file-max = 101365

fs.file-max = 6815744

The upper limit of ip_local_port range in response file is not greater than value in /etc/sysctl.conf, hence not changing it.

The upper limit of ip_local_port range in response file is not greater than value for current session, hence not changing it.

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_max=1048576

net.core.rmem_max = 4194304

net.core.wmem_max=262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

Installing Package /tmp/CVU_11.2.0.4.0_oracle//cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

1:cvuqdisk ########################################### [100%]

[root@node1 ~]#

Node 2:

[root@node2 ~]# /tmp/CVU_11.2.0.4.0_oracle/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_oracle/orarun.log

Setting Kernel Parameters...

fs.file-max = 101365

fs.file-max = 6815744

The upper limit of ip_local_port range in response file is not greater than value in /etc/sysctl.conf, hence not changing it.

The upper limit of ip_local_port range in response file is not greater than value for current session, hence not changing it.

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_max=1048576

net.core.rmem_max = 4194304

net.core.wmem_max=262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

Installing Package /tmp/CVU_11.2.0.4.0_oracle//cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

1:cvuqdisk ########################################### [100%]

[root@node2 ~]#
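runfixup.sh printed each kernel parameter it adjusted. To re-check them later, for example after a reboot, a sketch like the following compares the live values from `sysctl -n` against the values shown in the fixup output above. The parameter list comes from that output. ip_local_port_range is left out because it holds two numbers. Treating each value as a minimum is an assumption. below_minimum and check_kernel_params are hypothetical helpers.

```shell
#!/bin/bash
# Hypothetical helper: succeed when CURRENT ($1) is below REQUIRED ($2).
below_minimum() {
  [ "$1" -lt "$2" ]
}

# Hypothetical helper: compare live kernel values with those runfixup.sh set.
check_kernel_params() {
  local status=0 pair key want have
  for pair in "fs.file-max 6815744" "fs.aio-max-nr 1048576" \
              "net.core.rmem_max 4194304" "net.core.wmem_max 1048576"; do
    key=${pair% *}    # parameter name
    want=${pair#* }   # value from the fixup output
    if ! have=$(sysctl -n "$key" 2>/dev/null); then
      echo "unreadable: $key" >&2
      status=1
    elif below_minimum "$have" "$want"; then
      echo "low: $key = $have (fixup set $want)"
      status=1
    else
      echo "ok:  $key = $have"
    fi
  done
  return "$status"
}
```

Run `check_kernel_params` as any user on each node. It returns non-zero if any value is below the fixup setting.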

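After the fixup script runs, the checks still report missing packages:

Install the missing packages, libaio-devel and sysstat, on both nodes:

Node 1: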

[root@node1 ~]# ll

total 280

drwxr-xr-x 4 root root 4096 Dec 26 21:16 10gbackup

-rw------- 1 root root 1434 Dec 26 15:32 anaconda-ks.cfg

-rw-r--r-- 1 root root 54649 Dec 26 15:32 install.log

-rw-r--r-- 1 root root 4077 Dec 26 15:32 install.log.syslog

-rw-r--r-- 1 root root 11675 Dec 26 21:47 libaio-devel-0.3.106-5.x86_64.rpm

drwxr-xr-x 7 root root 4096 Dec 26 21:28 media

-rw-r--r-- 1 root root 178400 Dec 26 21:47 sysstat-7.0.2-3.el5.x86_64.rpm

[root@node1 ~]# rpm -ivh libaio-devel-0.3.106-5.x86_64.rpm

warning: libaio-devel-0.3.106-5.x86_64.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:libaio-devel ########################################### [100%]

[root@node1 ~]# rpm -ivh sysstat-7.0.2-3.el5.x86_64.rpm

warning: sysstat-7.0.2-3.el5.x86_64.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:sysstat ########################################### [100%]

[root@node1 ~]#

Node 2:

[root@node2 ~]# ll

total 280

drwxr-xr-x 4 root root 4096 Dec 26 21:17 10gbackup

-rw------- 1 root root 1434 Dec 26 15:40 anaconda-ks.cfg

-rw-r--r-- 1 root root 54649 Dec 26 15:40 install.log

-rw-r--r-- 1 root root 4077 Dec 26 15:40 install.log.syslog

-rw-r--r-- 1 root root 11675 Dec 26 21:48 libaio-devel-0.3.106-5.x86_64.rpm

drwxr-xr-x 7 root root 4096 Dec 26 21:09 media

-rw-r--r-- 1 root root 178400 Dec 26 21:48 sysstat-7.0.2-3.el5.x86_64.rpm

[root@node2 ~]# rpm -ivh libaio-devel-0.3.106-5.x86_64.rpm

warning: libaio-devel-0.3.106-5.x86_64.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:libaio-devel ########################################### [100%]

[root@node2 ~]# rpm -ivh sysstat-7.0.2-3.el5.x86_64.rpm

warning: sysstat-7.0.2-3.el5.x86_64.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:sysstat ########################################### [100%]

[root@node2 ~]#
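The NOKEY warning only means that rpm has no GPG public key imported for the package signature. The packages still install, as the 100% lines show. To confirm that nothing else is missing on either node before returning to the installer, you can ask `rpm -q` about each package name. missing_rpms is a hypothetical helper. The names you pass in are whatever the installer reported, here libaio-devel and sysstat.

```shell
#!/bin/bash
# Hypothetical helper: print each package name that rpm reports as not
# installed (one per line); prints nothing when all are present.
missing_rpms() {
  local pkg
  for pkg in "$@"; do
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}
```

For example: `missing_rpms libaio-devel sysstat` should print nothing on both nodes after the installs above.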

Next:

Next:

Next:

When prompted, run root.sh as root on each node, node 1 first:

Node 1:

[root@node1 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to inittab

CRS-2672: Attempting to start 'ora.mdnsd' on 'node1'

CRS-2676: Start of 'ora.mdnsd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'node1'

CRS-2676: Start of 'ora.gpnpd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node1'

CRS-2672: Attempting to start 'ora.gipcd' on 'node1'

CRS-2676: Start of 'ora.gipcd' on 'node1' succeeded

CRS-2676: Start of 'ora.cssdmonitor' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'node1'

CRS-2672: Attempting to start 'ora.diskmon' on 'node1'

CRS-2676: Start of 'ora.diskmon' on 'node1' succeeded

CRS-2676: Start of 'ora.cssd' on 'node1' succeeded

ASM created and started successfully.

Disk Group griddg created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 1ddd6122f4024f75bf5bba77ba8af58b.

Successfully replaced voting disk group with +griddg.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 1ddd6122f4024f75bf5bba77ba8af58b (ORCL:ASMDISK3) [GRIDDG]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'node1'

CRS-2676: Start of 'ora.asm' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.GRIDDG.dg' on 'node1'

CRS-2676: Start of 'ora.GRIDDG.dg' on 'node1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@node1 ~]#

Node 2:

[root@node2 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to inittab

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node node1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@node2 ~]#
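The CRS-4402 message on node 2 is expected when root.sh has already completed on node 1. Node 2 finds the cluster running on node1 and restarts to join it, and its run still ends with "succeeded". If you capture the output of each run, for example with tee, you can confirm the final status before moving on with a one-line check. Capturing the output this way is an assumption; the transcripts above were copied from the terminal. root_sh_succeeded is a hypothetical helper that looks for the last line both nodes printed.

```shell
#!/bin/bash
# Hypothetical helper: succeed when a captured root.sh log contains the
# final success line printed on both nodes above.
root_sh_succeeded() {
  grep -q 'Configure Oracle Grid Infrastructure for a Cluster .* succeeded' "$1"
}
```

For example, with a hypothetical log path: run `/u01/app/11.2.0/grid/root.sh 2>&1 | tee /tmp/root_node1.log` on node 1, then `root_sh_succeeded /tmp/root_node1.log && echo "node1 done, run root.sh on node2"`.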

Next:

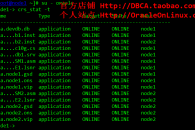

Run crs_stat from $ORACLE_HOME/bin of the Grid home to view the cluster resources:

node1-> /u01/app/11.2.0/grid/bin/crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.GRIDDG.dg ora....up.type ONLINE ONLINE node1

ora....ER.lsnr ora....er.type ONLINE ONLINE node1

ora....N1.lsnr ora....er.type ONLINE ONLINE node1

ora.asm ora.asm.type ONLINE ONLINE node1

ora.cvu ora.cvu.type ONLINE ONLINE node1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE node1

ora....SM1.asm application ONLINE ONLINE node1

ora....E1.lsnr application ONLINE ONLINE node1

ora.node1.gsd application OFFLINE OFFLINE

ora.node1.ons application ONLINE ONLINE node1

ora.node1.vip ora....t1.type ONLINE ONLINE node1

ora....SM2.asm application ONLINE ONLINE node2

ora....E2.lsnr application ONLINE ONLINE node2

ora.node2.gsd application OFFLINE OFFLINE

ora.node2.ons application ONLINE ONLINE node2

ora.node2.vip ora....t1.type ONLINE ONLINE node2

ora.oc4j ora.oc4j.type ONLINE ONLINE node1

ora.ons ora.ons.type ONLINE ONLINE node1

ora....ry.acfs ora....fs.type ONLINE ONLINE node1

ora.scan1.vip ora....ip.type ONLINE ONLINE node1

node1->
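crs_stat is deprecated in 11.2 in favor of `crsctl stat res -t`, but its tabular output is easy to filter. The sketch below reads `crs_stat -t` output on stdin and prints any resource whose Target is ONLINE but whose State is not. offline_but_expected is a hypothetical helper. The gsd resources show OFFLINE/OFFLINE above, which is normal in 11.2 because GSD is only needed for Oracle 9i databases. The filter skips them because their Target is OFFLINE.

```shell
#!/bin/bash
# Hypothetical helper: read `crs_stat -t` output on stdin and print the name
# of every resource with Target ONLINE but State not ONLINE.
# Columns: Name Type Target State Host; lines 1-2 are the header and ruler.
offline_but_expected() {
  awk 'NR > 2 && $3 == "ONLINE" && $4 != "ONLINE" { print $1 }'
}
```

For example: `/u01/app/11.2.0/grid/bin/crs_stat -t | offline_but_expected`. For the output above it prints nothing.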